AI DevOps Agent: From Prompts to Autonomous Infrastructure Creation

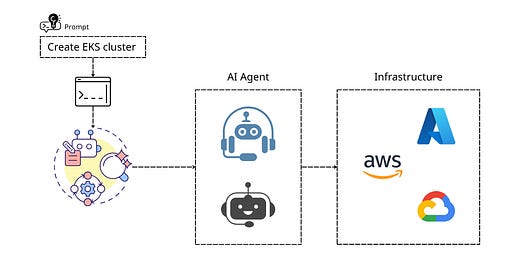

Transform from manual infrastructure management to directing intelligent agents that understand, plan, and execute complex deployments.

💡 Author’s Note: This article presents a conceptual framework and vision for AI-driven infrastructure management. While the foundational technologies (LLMs, vector databases, prompt engineering) are real and available today, the complete autonomous agent system described here represents my ideas for the future evolution of DevOps tooling. Consider this a roadmap and inspiration for what’s possible as we combine existing AI capabilities with infrastructure automation.

What if you could simply say “Deploy a production-ready EKS cluster for my e-commerce app” and watch an AI agent automatically break down the task, create all necessary components, and deploy everything with best practices?

This isn’t science fiction. You’re about to learn how to build your own AI DevOps agent that evolves from simple prompt responses to autonomous infrastructure management.

Welcome to the evolution: From Prompts to Agents.

The Evolution: From Chatbot to Agent

Most people think AI assistance stops at generating code snippets. But that’s just the beginning. Here’s the journey we’re taking:

Stage 1: Simple Prompts → Generate individual configurations

Stage 2: Context-Aware AI → Remember your preferences and standards

Stage 3: Intelligent Agents → Break down complex tasks and execute them autonomously

Let me show you how to build each stage, culminating in an agent that can deploy entire infrastructure stacks from a single instruction.

Stage 1: Your First AI Assistant

Every great agent starts with a solid foundation. Let’s build your personal AI assistant that knows your infrastructure preferences.

Building Your AI’s Memory

Create a knowledge folder. It becomes your AI's brain:

File: infrastructure-standards.txt

```text
My AWS infrastructure standards:
- Always use private subnets for workloads
- Enable VPC Flow Logs for security monitoring
- Use IAM roles with least-privilege access
- All resources tagged with Environment, Project, Owner
- Enable encryption at rest and in transit
- Use managed services when possible (RDS, EKS, etc.)
```

File: eks-requirements.txt

```text
My EKS cluster requirements:
- Use managed node groups with t3.medium instances
- Enable cluster autoscaler for cost optimization
- Configure AWS Load Balancer Controller
- Enable CloudWatch Container Insights
- Implement pod security standards
- Use spot instances for non-critical workloads
```

The Memory System
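Under the hood, "memory" here means similarity search: each standards file is stored alongside an embedding vector, and a query is answered with the documents whose vectors point in the most similar direction. The dependency-free sketch below shows only that retrieval step, using cosine similarity and made-up 3-dimensional vectors. Real embedding models return hundreds or thousands of dimensions, and ChromaDB runs this search for you with its own configurable distance metric. `MEMORY`, `cosine_similarity`, and `recall` are illustrative names, not part of any library:

```python
import math

# Toy "memory": each document id maps to an embedding vector.
# These 3-dimensional vectors are invented for illustration only.
MEMORY = {
    "infrastructure-standards.txt": [0.9, 0.1, 0.2],
    "eks-requirements.txt": [0.2, 0.9, 0.3],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def recall(query_embedding, k=1):
    """Return the ids of the k stored documents most similar to the query."""
    ranked = sorted(
        MEMORY,
        key=lambda doc_id: cosine_similarity(query_embedding, MEMORY[doc_id]),
        reverse=True,
    )
    return ranked[:k]


# A query whose (made-up) embedding points toward the EKS document
print(recall([0.1, 0.8, 0.2]))  # ['eks-requirements.txt']
```

Swapping the toy dictionary for a vector database changes the storage, not the idea: embed, compare, return the closest matches.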

Now let’s create the code that gives your AI persistent memory, using ChromaDB as an on-disk vector store and Gemini for embeddings:

```python
import os

import chromadb
import google.generativeai as genai

# Assumes your Gemini API key is in the GOOGLE_API_KEY environment variable
genai.configure(api_key=os.environ["GOOGLE_API_KEY"])

EMBEDDING_MODEL = "models/text-embedding-004"


class InfrastructureAI:
    def __init__(self):
        # Create persistent memory: Chroma keeps its data under ./infra_memory
        self.client = chromadb.PersistentClient(path="infra_memory")
        self.collection = self.client.get_or_create_collection("standards")

    def learn_from_files(self, knowledge_folder):
        """Teach AI your infrastructure standards"""
        for filename in os.listdir(knowledge_folder):
            if not filename.endswith(".txt"):
                continue
            with open(os.path.join(knowledge_folder, filename), "r") as file:
                content = file.read()
            # Convert to embedding and store
            embedding = genai.embed_content(
                model=EMBEDDING_MODEL,
                content=content,
            )["embedding"]
            # upsert (not add) so re-running updates a file instead of
            # colliding with the id stored last time
            self.collection.upsert(
                ids=[filename],
                documents=[content],
                embeddings=[embedding],
            )

    def recall_standards(self, query, n_results=3):
        """Find relevant standards for a query"""
        query_embedding = genai.embed_content(
            model=EMBEDDING_MODEL,
            content=query,
        )["embedding"]
        results = self.collection.query(
            query_embeddings=[query_embedding],
            n_results=n_results,
        )
        # Results are nested per query; we sent one query, so take index 0
        return results["documents"][0]
```

Stage 2: Context-Aware Infrastructure Generation
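Stage 2 rests on one idea: the retrieved standards are pasted into a structured prompt (Role, Context, Standards, Action, Format, Tone). Keeping that template in a plain function makes it easy to unit-test without calling the model. A minimal sketch; `build_prompt` is a hypothetical helper that mirrors the prompt used in `generate_infrastructure`:

```python
def build_prompt(description, standards):
    """Assemble the Role/Context/Standards/Action/Format/Tone prompt.

    `standards` is the list of documents returned by recall_standards().
    """
    # One bullet per retrieved document keeps the sources visually separate
    standards_block = "\n".join(f"- {doc.strip()}" for doc in standards) or "- (none found)"
    return (
        "Role: Act as a senior DevOps engineer with expertise in AWS and Terraform.\n"
        f"Context: {description}\n"
        f"My Standards:\n{standards_block}\n"
        "Action: Generate Terraform configuration that follows my standards.\n"
        "Format: Provide separate files (main.tf, variables.tf, outputs.tf).\n"
        "Tone: Include comments explaining security and cost decisions.\n"
    )


print(build_prompt("EKS cluster for an e-commerce app", ["Always use private subnets"]))
```

Inside the agent, the call would be `build_prompt(description, self.recall_standards(description))`, and a test can assert that every standard actually reached the prompt.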

Now your AI can remember your preferences and apply them automatically:

```python
# Add this method to the InfrastructureAI class from Stage 1
def generate_infrastructure(self, description):
    """Generate infrastructure based on description and standards"""
    # Recall relevant standards and join them into one block of text
    standards = "\n\n".join(self.recall_standards(description))

    # Create context-aware prompt
    prompt = f"""
    Role: Act as a senior DevOps engineer with expertise in AWS and Terraform.
    Context: {description}
    My Standards: {standards}
    Action: Generate Terraform configuration that follows my standards.
    Format: Provide separate files (main.tf, variables.tf, outputs.tf).
    Tone: Include comments explaining security and cost decisions.
    """

    # Generate with context. 'gemini-2.0-flash-exp' is an experimental model
    # name; substitute any model your API key can access.
    response = genai.GenerativeModel('gemini-2.0-flash-exp').generate_content(prompt)
    return response.text
```

Stage 3: The Breakthrough to Autonomous Agents

Here’s where it gets exciting. Instead of just generating code, we’re building an agent that can:

Understand complex requirements

Plan the implementation strategy

Execute tasks step-by-step

Validate results

Adapt based on feedback
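The first four capabilities map onto the `execute_plan` loop in the architecture that follows; the fifth, adapting based on feedback, is not in it. One way to add it is a bounded retry loop that feeds validation feedback into a revision step, which in the real agent would be another LLM call. A minimal sketch with toy stand-ins; `run_with_feedback` and the `fake_*` functions are hypothetical:

```python
def run_with_feedback(step, execute, validate, revise, max_attempts=3):
    """Execute a step, validate it, and retry with a revised step on failure.

    execute(step) -> result, validate(step, result) -> (ok, feedback),
    revise(step, feedback) -> new step. All three are supplied by the caller.
    """
    history = []
    result = None
    for attempt in range(1, max_attempts + 1):
        result = execute(step)
        ok, feedback = validate(step, result)
        history.append({"attempt": attempt, "ok": ok, "feedback": feedback})
        if ok:
            return {"success": True, "result": result, "history": history}
        # Adapt: hand the validation feedback to the reviser (e.g. the LLM)
        step = revise(step, feedback)
    return {"success": False, "result": result, "history": history}


# Toy stand-ins: the first attempt "forgets" encryption, the revision adds it
def fake_execute(step):
    return {"encrypted": step.get("encrypted", False)}


def fake_validate(step, result):
    return (True, "") if result["encrypted"] else (False, "encryption at rest is disabled")


def fake_revise(step, feedback):
    return {**step, "encrypted": True}


outcome = run_with_feedback({"action": "create_rds"}, fake_execute, fake_validate, fake_revise)
print(outcome["success"], len(outcome["history"]))  # True 2
```

The `max_attempts` cap matters: without it, a model that keeps producing the same broken configuration would loop forever.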

The Agent Architecture

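```python
import json

import google.generativeai as genai


class InfrastructureAgent:
    def __init__(self):
        self.memory = InfrastructureAI()
        # Tools the planner may choose from. run_terraform, run_aws_cli,
        # run_kubectl, validate_deployment, validate_step and rollback_step
        # are left for you to implement; execute_step is shown later.
        self.tools = {
            'terraform': self.run_terraform,
            'aws_cli': self.run_aws_cli,
            'kubectl': self.run_kubectl,
            'validate': self.validate_deployment,
        }

    def break_down_task(self, user_request):
        """Agent breaks down complex requests into actionable steps"""
        planning_prompt = f"""
        You are an expert DevOps agent. Break down this request into specific, actionable steps:

        Request: {user_request}
        Available tools: {list(self.tools.keys())}

        Create a step-by-step plan with:
        1. What needs to be created
        2. Dependencies between components
        3. Validation steps
        4. Rollback procedures

        Format as JSON with this structure:
        {{
          "steps": [
            {{
              "id": 1,
              "action": "create_vpc",
              "description": "Create VPC with public/private subnets",
              "tool": "terraform",
              "dependencies": [],
              "validation": "verify VPC and subnets exist"
            }}
          ]
        }}
        """
        # Request raw JSON so the reply is not wrapped in Markdown fences
        response = genai.GenerativeModel('gemini-2.0-flash-exp').generate_content(
            planning_prompt,
            generation_config={"response_mime_type": "application/json"},
        )
        return json.loads(response.text)

    def check_dependencies(self, dependencies, results):
        """True if every dependency id has completed successfully."""
        succeeded = {r['step_id'] for r in results if r.get('success')}
        return all(dep in succeeded for dep in dependencies)

    def execute_plan(self, plan):
        """Execute the step-by-step plan"""
        results = []
        for step in plan['steps']:
            print(f"Executing: {step['description']}")
            # Check dependencies; record the skip so dependents are skipped too
            if not self.check_dependencies(step['dependencies'], results):
                print(f"Dependencies not met for step {step['id']}")
                results.append({'step_id': step['id'], 'success': False,
                                'error': 'dependencies not met'})
                continue
            # Execute the step and remember which step produced the result
            result = self.execute_step(step)
            result['step_id'] = step['id']
            results.append(result)
            # Validate; on failure, roll back this step and stop
            if not self.validate_step(step, result):
                print(f"Validation failed for step {step['id']}")
                self.rollback_step(step)
                break
        return results
```

The Magic in Action: Real-World Example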

Let’s see how this works with a real request:

User Input (Simple UI)

"Deploy a production-ready EKS cluster for my e-commerce application. It needs to handle 10,000 concurrent users and be highly available."

Agent’s Internal Process
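The agent's first job is turning model output into data it can act on. Models often wrap JSON, HCL, or YAML replies in Markdown code fences, which breaks `json.loads()` or `terraform` if the text is passed through verbatim. A small defensive helper can strip the fence when one is present. `extract_code` is a hypothetical name, and the regex assumes the common layout of an opening fence with an optional language tag:

```python
import json
import re

TICKS = "`" * 3  # a Markdown code fence, built here so this listing stays readable
# Opening fence with an optional language tag, the body, then the closing fence
FENCE_RE = re.compile(TICKS + r"[\w+-]*\n(.*?)" + TICKS, re.DOTALL)


def extract_code(text):
    """Return the body of the first fenced block, or the whole text if unfenced."""
    match = FENCE_RE.search(text)
    return (match.group(1) if match else text).strip()


reply = TICKS + 'json\n{"steps": [{"id": 1, "action": "create_vpc"}]}\n' + TICKS
plan = json.loads(extract_code(reply))
print(plan["steps"][0]["action"])  # create_vpc
```

The same helper could clean `tf_code.text` and `manifests.text` in `execute_step` before they are saved or applied.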

Step 1: Task Breakdown

```json
{
  "steps": [
    {
      "id": 1,
      "action": "create_vpc",
      "description": "Create VPC with multi-AZ subnets",
      "tool": "terraform",
      "dependencies": []
    },
    {
      "id": 2,
      "action": "create_eks_cluster",
      "description": "Create EKS cluster with managed node groups",
      "tool": "terraform",
      "dependencies": [1]
    },
    {
      "id": 3,
      "action": "configure_autoscaling",
      "description": "Setup cluster autoscaler and HPA",
      "tool": "kubectl",
      "dependencies": [2]
    },
    {
      "id": 4,
      "action": "deploy_load_balancer",
      "description": "Install AWS Load Balancer Controller",
      "tool": "kubectl",
      "dependencies": [2]
    },
    {
      "id": 5,
      "action": "validate_deployment",
      "description": "Test cluster functionality and scaling",
      "tool": "validate",
      "dependencies": [2, 3, 4]
    }
  ]
}
```

Step 2: Execution
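`execute_plan` walks the steps in the order the model listed them, which only works if the model happened to list every dependency first. A safer approach is to validate the plan and sort it topologically before running anything. This sketch uses Python's standard `graphlib` module; `order_plan` and `ALLOWED_TOOLS` are hypothetical names, with the tool set mirroring the agent's tool registry:

```python
from graphlib import CycleError, TopologicalSorter  # standard library, Python 3.9+

# Mirrors the keys of InfrastructureAgent.tools
ALLOWED_TOOLS = {"terraform", "aws_cli", "kubectl", "validate"}


def order_plan(plan, allowed_tools=ALLOWED_TOOLS):
    """Validate an LLM-produced plan and return its steps in dependency order."""
    steps = {step["id"]: step for step in plan["steps"]}
    if len(steps) != len(plan["steps"]):
        raise ValueError("duplicate step ids")
    for step in steps.values():
        if step["tool"] not in allowed_tools:
            raise ValueError(f"step {step['id']}: unknown tool {step['tool']!r}")
        missing = set(step["dependencies"]) - set(steps)
        if missing:
            raise ValueError(f"step {step['id']}: unknown dependencies {sorted(missing)}")
    # graphlib expects {node: predecessors}, which is exactly "dependencies"
    graph = {step_id: step["dependencies"] for step_id, step in steps.items()}
    try:
        order = list(TopologicalSorter(graph).static_order())
    except CycleError as exc:
        raise ValueError(f"dependency cycle: {exc.args[1]}") from exc
    return [steps[step_id] for step_id in order]


# The five-step EKS plan from Step 1, deliberately listed out of order
sample = {"steps": [
    {"id": 5, "tool": "validate", "dependencies": [2, 3, 4]},
    {"id": 3, "tool": "kubectl", "dependencies": [2]},
    {"id": 1, "tool": "terraform", "dependencies": []},
    {"id": 4, "tool": "kubectl", "dependencies": [2]},
    {"id": 2, "tool": "terraform", "dependencies": [1]},
]}
print([step["id"] for step in order_plan(sample)])
```

Rejecting unknown tools and cyclic plans up front means a hallucinated step fails fast, before any infrastructure is touched.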

The agent now executes each step automatically:

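```python
# Method of InfrastructureAgent
def execute_step(self, step):
    """Execute individual step with appropriate tool.

    Every branch returns a dict like {'success': bool, 'error': str},
    which is what execute_plan and the UI expect.
    """
    if step['tool'] == 'terraform':
        # Generate Terraform for this step, grounded in the stored standards
        standards = "\n\n".join(self.memory.recall_standards(step['description']))
        # The user count is hard-coded here for the running example
        tf_prompt = f"""
        Generate Terraform for: {step['description']}
        Following these standards: {standards}
        Consider high availability for 10,000 concurrent users.
        """
        tf_code = genai.GenerativeModel('gemini-2.0-flash-exp').generate_content(tf_prompt)
        # Save and apply. save_terraform_file and run_terraform are helpers
        # you provide (e.g. write step_<id>/main.tf, then init and apply there).
        self.save_terraform_file(step['id'], tf_code.text)
        return self.run_terraform(f"step_{step['id']}")

    elif step['tool'] == 'kubectl':
        # Generate Kubernetes manifests, then apply them via run_kubectl
        k8s_prompt = f"""
        Generate Kubernetes manifests for: {step['description']}
        Cluster should handle 10,000 concurrent users.
        """
        manifests = genai.GenerativeModel('gemini-2.0-flash-exp').generate_content(k8s_prompt)
        return self.run_kubectl(manifests.text)

    # Remaining tools ('aws_cli', 'validate'): assumes their handlers
    # accept the step dict and return the same result shape
    handler = self.tools.get(step['tool'])
    if handler is None:
        return {'success': False, 'error': f"Unknown tool: {step['tool']}"}
    return handler(step)
```

The Beautiful UI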

Here’s a simple Streamlit interface that makes this incredibly user-friendly:

```python
import streamlit as st


def main():
    st.title("🤖 AI Infrastructure Agent")
    st.write("Describe your infrastructure needs, and I'll build it for you!")

    # User input
    user_request = st.text_area(
        "What do you want to deploy?",
        placeholder="Deploy a production-ready EKS cluster for my e-commerce app...",
    )

    if st.button("Build Infrastructure") and user_request:
        agent = InfrastructureAgent()

        # Show planning phase
        with st.spinner("Planning deployment..."):
            plan = agent.break_down_task(user_request)
        st.success("Plan created!")
        st.json(plan)

        # Execute with progress. Note: this calls execute_step directly,
        # so it skips the dependency checks in execute_plan.
        progress_bar = st.progress(0)
        status_text = st.empty()
        for i, step in enumerate(plan['steps']):
            status_text.text(f"Executing: {step['description']}")
            result = agent.execute_step(step)
            progress_bar.progress((i + 1) / len(plan['steps']))
            if result['success']:
                st.success(f"✅ {step['description']}")
            else:
                st.error(f"❌ {step['description']}: {result['error']}")
                break
        else:
            # for/else: runs only if no step failed (the loop never hit break)
            st.balloons()
            st.success("Infrastructure deployed successfully!")


if __name__ == "__main__":
    main()
```

Advanced Agent Capabilities

Once you have the basic agent, you can enhance it with more sophisticated capabilities:

1. Cost Optimization Agent

```python
# Method of InfrastructureAgent
def optimize_costs(self, infrastructure_plan):
    """Analyze and optimize infrastructure costs"""
    cost_prompt = f"""
    Analyze this infrastructure plan for cost optimization:
    {infrastructure_plan}

    Suggest:
    1. Spot instances where appropriate
    2. Right-sizing recommendations
    3. Reserved instance opportunities
    4. Unused resource identification
    """
    optimization = genai.GenerativeModel('gemini-2.0-flash-exp').generate_content(cost_prompt)
    # apply_optimizations is a helper you provide
    return self.apply_optimizations(optimization.text)
```

2. Security Hardening Agent

```python
# Method of InfrastructureAgent
def security_audit(self, deployed_infrastructure):
    """Automatically audit and harden security"""
    security_prompt = f"""
    Audit this infrastructure for security issues:
    {deployed_infrastructure}

    Check for:
    1. Open security groups
    2. Unencrypted resources
    3. Missing IAM best practices
    4. Public access configurations

    Provide remediation commands.
    """
    audit = genai.GenerativeModel('gemini-2.0-flash-exp').generate_content(security_prompt)
    # apply_security_fixes is a helper you provide
    return self.apply_security_fixes(audit.text)
```

3. Monitoring Setup Agent

```python
# Method of InfrastructureAgent
def setup_monitoring(self, infrastructure):
    """Automatically configure monitoring and alerting"""
    monitoring_prompt = f"""
    Setup comprehensive monitoring for:
    {infrastructure}

    Include:
    1. CloudWatch dashboards
    2. Critical alerts
    3. Log aggregation
    4. Performance metrics
    5. Cost monitoring
    """
    monitoring_config = genai.GenerativeModel('gemini-2.0-flash-exp').generate_content(monitoring_prompt)
    # deploy_monitoring is a helper you provide
    return self.deploy_monitoring(monitoring_config.text)
```

The Agent Team Structure

```python
class InfrastructureAgentTeam:
    def __init__(self):
        # Each specialist is a class you would implement (not shown),
        # typically built like InfrastructureAgent with its own prompts
        self.agents = {
            'architect': ArchitectAgent(),        # Plans infrastructure
            'security': SecurityAgent(),          # Ensures security compliance
            'cost': CostOptimizationAgent(),      # Optimizes costs
            'deployment': DeploymentAgent(),      # Executes deployments
            'monitoring': MonitoringAgent(),      # Sets up observability
            'maintenance': MaintenanceAgent(),    # Handles updates & patches
        }

    def deploy_infrastructure(self, requirements):
        """Orchestrate multiple agents to deploy infrastructure"""
        # Architect agent creates the plan
        plan = self.agents['architect'].design_infrastructure(requirements)
        # Security agent reviews and hardens
        secure_plan = self.agents['security'].review_and_harden(plan)
        # Cost agent optimizes
        optimized_plan = self.agents['cost'].optimize_costs(secure_plan)
        # Deployment agent executes
        deployment = self.agents['deployment'].deploy(optimized_plan)
        # Monitoring agent sets up observability
        monitoring = self.agents['monitoring'].setup_monitoring(deployment)
        # The maintenance agent is not part of this initial deployment flow
        return deployment, monitoring
```

Agent Collaboration Example

User Request: “Deploy a microservices platform for 50 services”

Agent Collaboration:

Architect Agent: Designs service mesh, API gateway, shared services

Security Agent: Implements zero-trust networking, secret management

Cost Agent: Optimizes instance types, implements autoscaling

Deployment Agent: Executes in a blue-green deployment pattern

Monitoring Agent: Sets up distributed tracing, metrics, and alerting

Maintenance Agent: Schedules updates, implements backup strategies
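Under the hood, this collaboration is a hand-off pipeline: each agent receives the plan, amends it, and passes it on, just as `InfrastructureAgentTeam.deploy_infrastructure` chains the architect, security, cost, and deployment agents. Recording who changed what keeps those hand-offs reviewable. In this sketch, plain functions with simple hard-coded rules stand in for the LLM-backed agents; `Plan`, `security_agent`, `cost_agent`, and `run_pipeline` are hypothetical names:

```python
from dataclasses import dataclass, field


@dataclass
class Plan:
    """A shared plan that each agent reads and amends."""
    components: dict
    audit_log: list = field(default_factory=list)


def security_agent(plan):
    # Hypothetical rule: every component gets encryption enabled
    for name, spec in plan.components.items():
        if not spec.get("encrypted"):
            spec["encrypted"] = True
            plan.audit_log.append(("security", f"enabled encryption on {name}"))
    return plan


def cost_agent(plan):
    # Hypothetical rule: non-critical node groups move to spot capacity
    for name, spec in plan.components.items():
        if spec.get("kind") == "node_group" and not spec.get("critical", True):
            spec["capacity"] = "spot"
            plan.audit_log.append(("cost", f"switched {name} to spot"))
    return plan


def run_pipeline(plan, agents):
    """Hand the plan to each agent in turn; each output feeds the next agent."""
    for agent in agents:
        plan = agent(plan)
    return plan


plan = Plan(components={
    "orders-db": {"kind": "database"},
    "batch-nodes": {"kind": "node_group", "critical": False},
})
result = run_pipeline(plan, [security_agent, cost_agent])
for agent_name, change in result.audit_log:
    print(f"{agent_name}: {change}")
```

Because every agent shares one interface (plan in, plan out), adding the monitoring or maintenance agent is a matter of appending to the list.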

The Results: From Days to Minutes

Here’s what this agent-driven approach aims to deliver:

Speed: Complete infrastructure deployment goes from days to minutes

Consistency: Every deployment follows your standards automatically

Intelligence: The agent can improve over time as results from past deployments feed back into its memory

Autonomy: Complex tasks are broken down and executed without human intervention

Reliability: Built-in validation and rollback procedures
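Built-in validation is strongest when part of it is deterministic rather than another model call. For example, `terraform show -json <planfile>` emits a plan as JSON, and a few lines of Python can flag security groups open to the whole internet before anything is applied. This sketch assumes Terraform's JSON plan layout (`planned_values.root_module`, with nested `child_modules`) and the AWS provider's `aws_security_group` / `aws_security_group_rule` attributes; `find_open_ingress` is a hypothetical helper:

```python
def iter_resources(module):
    """Yield every resource in a planned_values module, including child modules."""
    yield from module.get("resources", [])
    for child in module.get("child_modules", []):
        yield from iter_resources(child)


def find_open_ingress(plan_json):
    """Return addresses of security groups/rules allowing ingress from 0.0.0.0/0."""
    root = plan_json.get("planned_values", {}).get("root_module", {})
    findings = []
    for res in iter_resources(root):
        values = res.get("values") or {}
        if res.get("type") == "aws_security_group":
            rules = values.get("ingress") or []
        elif res.get("type") == "aws_security_group_rule" and values.get("type") == "ingress":
            rules = [values]
        else:
            continue
        if any("0.0.0.0/0" in (rule.get("cidr_blocks") or []) for rule in rules):
            findings.append(res["address"])
    return findings


# A trimmed, hand-written example of the plan JSON shape
example = {"planned_values": {"root_module": {
    "resources": [{"address": "aws_security_group.web", "type": "aws_security_group",
                   "values": {"ingress": [{"cidr_blocks": ["0.0.0.0/0"], "from_port": 22}]}}],
    "child_modules": [{"resources": [{"address": "module.db.aws_security_group.db",
                                      "type": "aws_security_group",
                                      "values": {"ingress": [{"cidr_blocks": ["10.0.0.0/16"]}]}}]}],
}}}
print(find_open_ingress(example))  # ['aws_security_group.web']
```

In the agent, `validate_step` could load the real plan with `json.load` and fail the step whenever this list is non-empty, regardless of what the model's own audit says.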

Projected Impact (Illustrative Estimates)

Traditional Approach:

2 days of planning and research

1 day of writing Terraform configurations

1 day of debugging and testing

4 hours of deployment and validation

Total: 4+ days

Agent Approach:

5 minutes describing requirements

10 minutes of agent planning and execution

5 minutes of validation and monitoring setup

Total: 20 minutes

The Agent Revolution (A Vision for Tomorrow)

The transformation from manual infrastructure management to intelligent agents represents what I believe will be the biggest shift in DevOps since the introduction of containers. This conceptual framework envisions us moving from:

Manual Configuration → Conversational Instructions

Single-Purpose Tools → Intelligent Agents

Reactive Operations → Proactive Automation

Human-Driven Tasks → Agent-Orchestrated Workflows

Your role isn’t disappearing — it’s evolving. In this vision, you become an Agent Director, orchestrating intelligent systems that understand your requirements, break down complex tasks, and execute them with precision.

The future belongs to those who can:

Design intelligent agent behaviors

Direct multi-agent teams

Validate autonomous operations

Optimize agent decision-making

For more insights on AI-driven DevOps practices, check my book PromptOps: From YAML to AI — a comprehensive guide to leveraging AI for DevOps workflows. The book covers everything from basic prompt engineering to building team-wide AI-assisted practices, with real-world examples for Kubernetes, CI/CD, cloud infrastructure, and more.